Pipeline Protocols

rules, roles and heuristics governing releases

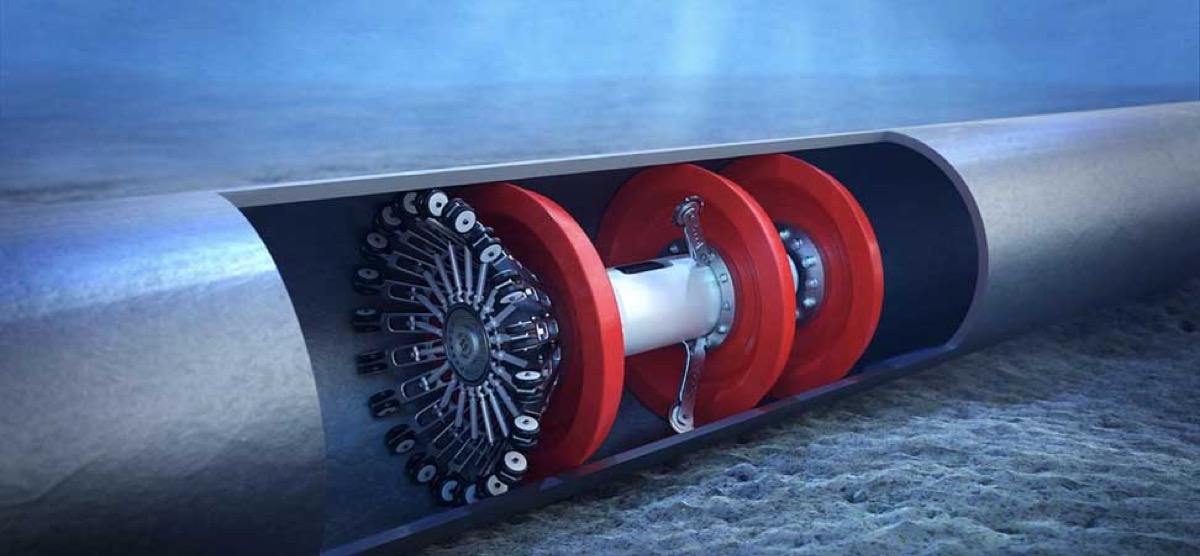

A pipeline is a metaphor for getting things done, and it’s easy to understand why when you think about an actual pipeline.

Think of a water pipe: a big tube with fluid being pushed through it. Open the faucet, out comes the water. Turn the faucet off, and the water just sits there, waiting till you need some more. Simple. That’s what I thought before I worked on software for oil pipelines.

A petroleum pipeline is a bit different. The same oil pipeline is often used for different products. For example, a batch of gasoline can be followed by a batch of jet fuel. Pipeline operators can just push one product in after the previous one; the guy at the other end of the line reads the volume meter and, when the meter indicates the batch is complete, he stops the flow by closing a valve, then switches the line to a different storage container before reopening the line. A bit of gasoline in the jet fuel isn’t a problem, but he tries not to get the different products mixed up too much.

To mitigate the risk of mixing products, batches are separated with a plug, called a pig. The pig travels down the line between batches all the way to the destination, so there’s no mixing. A special kind of pig is used to scrape off deposits that accumulate along the inside of the pipeline.

They’re called pigs because the first pipeline cleaning plugs, used back in the 1870s, were hay bales wrapped with barbed wire, and they squealed like pigs as they scraped along the inside of the pipelines.

My client had us working on software to control the batches, to verify the volume of the batch delivered, and to certify that different petroleum products were not getting mixed.

We had a release manager and release protocols, but Continuous Delivery didn’t become a mainstream software practice until around 2010. When I started reading about software delivery practices, I immediately saw their similarities to the batching processes used in the petroleum pipeline industry.

Pipeline operators are trying to avoid mixing batches. What we’re trying to avoid is letting our new code get out into production before we know that the batch is good. Our batches are Pull Requests. Like pipeline operators, we also use plugs in our pipelines to make sure the code stops at a specified stage for inspection, to make sure the batch is good, before we let it move on down the line. Our pigs are release protocols, imaginary objects that plug our virtual pipelines. Release protocols are the rules, roles and heuristics that we apply to determine if a plug can be removed so that the code can be pulled into the next stage. When we set up a systematic way of doing this, it’s called “Continuous Delivery”.

Locking in Gains

A definition of Continuous Delivery from Jez Humble’s book is “the ability to get changes of all types - including new features, configuration, bug fixes, and experiments - into production, safely and quickly in a sustainable way.” Protocols and release gates are the pipeline metadata that give delivery systems meaning.

The only way we gain ground is by delivering. Your organization gains by getting the work out the door so they can bill for it, and the customer gains because it is only on delivery that they can leverage the value that they’re paying for.

Software delivery is not like an oil pipeline where you just keep pushing batches down the line, one after another. Our products are complex systems, and what constitutes acceptable quality in software is far more ambiguous. Ambiguity means risk.

Agile practice is about mitigating risk through iterative development. Delivery practice is about mitigating risk through periodic inspection. It locks in the gains of each iteration, so that when it comes time to deliver, you can be confident the customer will actually get the value you’ve been promising.

Delivery practice is much bigger than the automation work that people refer to as “devops”. It’s not something you can just relegate to a bunch of build-automation Docker images, knobs and dials and tangled wires back in the engine room. It’s a process that includes people in non-technical roles, and it has a lot to do with how team members collaborate. What makes an organization grow stronger is not so much the value of the delivered product as the value of what we learn through the process of delivery. You could say that delivering is about survival, and what we learn along the way is how we stay competitive. Delivery practice is as much about learning through feedback as it is about actually getting the work out the door.

Our goal in this talk is not to give you a “state of the art” report on the latest tools and technology used by Devops teams. Instead, we want to bring into focus the role that Delivery Practice plays in building Quality and Productivity, and ultimately in bringing Competitive Position to the teams and organizations that take it seriously.

A few years ago I had the opportunity to visit Japan, and like any tourist, I visited a number of shrines. I also visited the Toyota museum in Nagoya, a sort of shrine to Lean practice. The curators went beyond creating a mere showcase of inventions to tell a story of innovation as a process.

In 19th-century Japan, when manually operated wooden shuttle looms were the cornerstone of the local economy, Sakichi Toyoda was an unusually observant child growing up in a village of weavers. He watched the workflow of the weaver and the loom. His parents surely thought there was something wrong with the quiet concentration of the young child. But observation became innovation when, one day, he showed how to reconfigure the loom so that it could be operated with just one hand, a significant improvement in productivity.

Sakichi Toyoda obtained his first patent in 1890, and in the following years, he iterated relentlessly, including plans for the first automatic shuttle-change loom in 1903, one in a long and prodigious series of advancements that competitors sought to copy. When his colleagues asked what to do about patent violations, Toyoda famously said:

“The thieves may be able to follow the design plans and produce a loom. But we are modifying and improving our looms every day. They do not have the expertise gained from the failures it took to produce the original. We need not be concerned. We need only continue as always, making our improvements.”

In the decades that followed, the Toyoda Automatic Loom Company became a prosperous enterprise. With Ford motor cars transforming the economics of transportation, Sakichi Toyoda set his sights on auto manufacturing, a much more ambitious undertaking. He sent his son Kiichiro abroad to sell their portfolio of loom patents. Kiichiro closed a deal with Platt Brothers in the United Kingdom for the automatic loom patent. That raised capital for an automotive startup in an industry already dominated by American and European manufacturers. Despite the extensive metal-casting experience they brought to the project, the defect rate of their early engine blocks was very high, and the success of the venture was by no means a sure thing.

One can imagine these guys in their workshop in Nagoya, burning through their capital, surrounded by ruined engine blocks and wondering if they should have just stuck to the loom business. So, spoiler alert for those of you who haven’t seen the movie: these guys did go on to make a few good cars.

Toyota produced trucks through the war years, and afterwards faced restarting with no market, no money and, surprisingly for Japan, a workforce out on strike. It was, to say the least, a difficult time.

During the postwar period of rebuilding, a line manager from the Toyoda Automatic Loom Company named Taiichi Ohno transferred into Toyota Motors as a shop supervisor. He had been a careful student of American auto manufacturing practices from the beginning. When he had the responsibility to set up production lines, he made a number of critical innovations to what was considered manufacturing best practice at the time. These included Just-in-Time Delivery, a new paradigm that eventually became the model for today’s software delivery practices.

A production line that never stopped was either extremely good or extremely bad.

— Taiichi Ohno

To understand the significance of Taiichi Ohno’s contribution, we need to take a step back and look at the thinking he departed from: the assembly line manufacturing process pioneered by Ford. It was based on the theory of Scientific Management promulgated by efficiency expert Frederick Winslow Taylor, a contemporary of Sakichi Toyoda.

From the days of the Ford Model T, American auto workers were trained to do just one task, and to do it in the least time possible. They weren’t being paid to think, and management made sure they knew it. Efficiency experts trained in Taylor’s system would study each step in the assembly process, such as attaching some part with bolts. They would prescribe:

1.) where the bolts and the parts should be at the start of the operation

2.) which bolt to put in first and which last

3.) how many turns of the wrench were required to tighten each bolt

4.) the total time of the operation.

That time was then built into the physical assembly line, so that it was entirely non-negotiable with the worker. No nose scratching or pee breaks permitted.

Lest there be any doubt about Taylor’s intent, he declared that “Hardly a competent workman can be found who does not devote a considerable amount of time to studying just how slowly he can work and still convince his employer that he is going at a good pace.”

Taylor’s system of efficiency succeeded in crushing the competition from hand-craft assembly of machines. Within a generation, Ford-style assembly lines were in widespread use, and the economic benefits were quickly normalized. Continued strict adherence to Taylor’s management methods did collateral damage to the workforce and proved counterproductive. Even so, fixed time as the measure of efficiency remained the standard of practice long after it had outlived its usefulness. By the 1970s, the long shadow that Taylorism cast over American manufacturing was a big factor behind the poor quality and adversarial labor relations that brought American car manufacturing to the brink of bankruptcy; for Chrysler, it was over the edge.

The process pioneered by Taiichi Ohno at Toyota was eventually championed in the West by W. Edwards Deming, who had been brought to Japan as a management consultant during the postwar reconstruction period.

As a physicist, management consultant and all-around great guy, Deming was perhaps the most outstanding catalyst for the science of productivity of the 20th century. He merged the culture of Toyota with the American tradition of invention and innovation that we perhaps identify with Benjamin Franklin. Deming hardened the science of innovation with the discipline of statistical analysis. He attributed the core of what he taught in Japan to the quality control pioneer from Bell Labs, Walter A. Shewhart.

Shewhart taught how to bring a process into statistical control, and then to manage quality as a matter of continuous improvement rather than adherence to specifications. Early Japanese auto imports to the US were cheap and of low quality. The fusion of Deming, Taiichi Ohno and other contributors produced what came to be known as the Toyota Production System, under which quality improved relentlessly.

Now, Americans have pride in nothing so much as their cars, and they responded to the Japanese insult of better and cheaper cars in the true spirit of the free market: by imposing import tariffs on Japanese cars, to make them more expensive. This forced Toyota into a joint venture with GM.

The first attempt to run the Toyota Production System in the US came in 1984. It was a Toyota-GM joint venture called NUMMI, the New United Motor Manufacturing Incorporated, at the shuttered GM factory in Fremont, California. In the 1970s that plant had the highest defect rate of any GM plant, and that’s saying a lot. They were producing the Chevy Celebrity and the Oldsmobile Cutlass Ciera, nice cars apart from how often they were in the shop for repairs.

GM had spent a decade fighting with the United Auto Workers before it could finally get out of its contract with the union at Fremont. So when Toyota insisted on retaining the same workforce, the GM executives thought the Japanese were simply naive. They warned the incoming Toyota team that it didn’t understand just how bad these American union workers were. What the GM guys didn’t get, and the Toyota executives understood, was what Deming and Shewhart had taught: quality is almost always a question of process, a management responsibility. That is why Toyota insisted on keeping the same workforce that GM blamed for its quality problems.

Eighty-five percent of the reasons for failure are deficiencies in the systems and process rather than the employee.

— W. Edwards Deming

Toyota took United Auto Workers members laid off from the failed Fremont plant to Nagoya, to show them how the line is run in a Toyota plant. One of Taiichi Ohno’s innovations over the assembly line best practice of the time was to install pull cords within easy reach of every work station. One pull turns on an amber light to call over a manager. If the problem can’t be resolved in real time, a second pull on the cord stops the line.

The Andon cord is a feedback mechanism that makes it explicit that quality is management’s top priority. Everyone, down to the guy sweeping the shop floor, has the authority to require a manager to come and observe some aspect of work in progress. Under the Toyota system, the manager’s job is not so much to tell people what to do, but to respond to calls to look at circumstances on the line in real time, and make things right.

After seeing the Toyota Production System in action, one UAW member put it this way:

“I can’t remember any time in my working life where anybody asked for my ideas to solve the problem. There’s nobody to pull you out at General Motors, so you’re going to let something go. Hundreds of misassembled cars. Never stop the line.”

That was GM before the TPS.

Recognizing the value of feedback, Taiichi Ohno rejected the notion of fixed time as the regulator of the assembly line. In its place, he introduced a buffering system with a signaling mechanism that allowed for variable time at a workstation without adversely affecting the flow of the line. The signaling mechanism is called kanban, which roughly means “signal card” in Japanese.

Kanban refers to a pull-based buffer system of workflow management regulated by a signaling system. Using real-time feedback and buffers in place of fixed time to regulate work laid the groundwork for shortening supply chains. Improvements identified from real-time feedback could then be quickly incorporated into the supply chain. That reduced the cost of scrapping defective work already in inventory and increased quality through continual improvement. This aspect of Taiichi Ohno’s contribution is known as Just-in-Time Delivery.
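To make the pull mechanism concrete, here is a minimal sketch of a kanban buffer between two workstations. It is an illustrative model, not a description of Toyota’s actual system. The class name `KanbanBuffer` and its methods are hypothetical, and the model assumes the buffer starts full. Downstream pulls work. Each pull posts a signal card. Upstream produces only against outstanding cards, so how long any one station takes doesn’t set the pace.

```python
from collections import deque
from dataclasses import dataclass, field
from typing import Callable, Optional


@dataclass
class KanbanBuffer:
    """Hypothetical model of a pull-based buffer between two workstations.

    Assumes the buffer starts full. Each downstream pull posts one signal
    card; upstream produces only against outstanding cards, never on a clock.
    """
    capacity: int
    items: deque = field(default_factory=deque)
    cards: int = 0  # outstanding signal cards waiting for upstream

    def pull(self) -> Optional[str]:
        """Downstream takes the next item, or None if the buffer is empty."""
        if not self.items:
            return None
        self.cards += 1  # signal upstream: one slot needs replacing
        return self.items.popleft()

    def replenish(self, make_item: Callable[[], str]) -> int:
        """Upstream fills outstanding cards, never exceeding capacity."""
        produced = 0
        while self.cards > 0 and len(self.items) < self.capacity:
            self.items.append(make_item())
            self.cards -= 1
            produced += 1
        return produced


buffer = KanbanBuffer(capacity=3, items=deque(["a", "b", "c"]))
first = buffer.pull()                   # downstream pulls "a"; one card is posted
made = buffer.replenish(lambda: "new")  # upstream makes exactly one replacement
idle = buffer.replenish(lambda: "new")  # no card outstanding, so nothing is made
```

The design choice is the point of the sketch. Upstream stays idle until a card arrives, so overproduction never accumulates as inventory.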

Deming’s books brought the revolutionary concept of just-in-time delivery and other Toyota Production System innovations to the attention of American manufacturers. His 1982 book Quality, Productivity and Competitive Position provides perspective on the state of what was to become known as “Lean theory”, as it began to influence software thought leaders.

Recognition of Deming’s role in sparking a revolution in manufacturing productivity was generally limited to management circles at the time Ronald Reagan awarded him the National Medal of Technology:

These are the dreamers, the builders, the men and women who are the heroes of the modern age.

— Ronald Reagan, 25 June 1987

The publicity generated by the award sparked a series of articles and interviews with Deming, then 87 years old. Seeing Reagan’s speech, news editors around the country asked, “Who is this Deming guy? Go get me an interview with him!” His unpretentious optimism and vitality resonated with people working in spheres beyond manufacturing, including the rapidly evolving field of software engineering.

The purpose of the Toyota Production System practice of Just In Time delivery was mostly misinterpreted by American bean-counters as merely a technique to reduce inventory carrying costs.

Software thought leaders recognized the essential value of establishing a systematic way of quickly capturing and correcting defects, and restated this Quality Assurance principle as a new standard of practice called Continuous Integration, popularized in 1999 by Kent Beck’s “Extreme Programming Explained”.

Finding and fixing defects in real time seems like common sense, but it brought radical improvements on the assembly line, and it transformed how we build and deliver software.

Identifying defects sooner saves a lot of time and money. So does learning from break-fix cycles: exploring root cause, and taking action to prevent defects of the same type from recurring. This kind of feedback mechanism can also yield other benefits, such as increased throughput and improved fitness of product.

Think about what Sakichi Toyoda said, “…They do not have the expertise gained from the failures it took to produce the original.”

The delivery pipeline is where we can earn the expertise that, over time, sets us apart from our competitors.

The product that you manage to ship may pay the bills but what you learn in the process is what strengthens the organization. We can only learn from problems when we recognize them as such. The motto in a GM plant was “never stop the line”. In a Toyota plant, the andon cord is pulled thousands of times a day. Not every pull represents a break fix, but each one represents a feedback cycle.

Feedback is a signal that calls for a response. The response might be catching an error early, noting how something might be improved, or simply resolving uncertainty. Above all, software team managers need to get out of meetings and pay attention to the specific circumstances of work in progress. Real-time signaling from their value stream should tell them where to focus their attention.

You can think of pipelines as the structure that allows us to install our signaling mechanisms. Feedback from test cases running in a stable pipeline stage is what lets us develop that necessary expertise. We learn through observation and experimentation, and we keep improving the flow of valuable work through the pipeline to meet the goal of satisfying the customer.

Delivery is the boundary between our promises and actually satisfying the customer. Each stage of delivery is an opportunity to verify that the development team’s understanding of the work is in alignment with the customer’s expectations. Managing customer expectations is hard enough without introducing ambiguities about test environments and application versions. We use pipelines to stabilize things enough to get meaningful feedback from the customer, but before we can do that, we have to clear up ambiguities about what we mean by “pipeline”. So let’s review the definitions of six terms in our problem domain, just to make sure we’re on the same page:

1.) pipeline

2.) system

3.) environment

4.) stages

5.) protocols

6.) releases

The purpose of a pipeline is to regulate the flow of a software system from initial commit through stages, eventually going live. Pipelines deliver systems.

A system is the network of interdependent components which work together to achieve the goals of the system. Systems provide value to customers. While projects may be focused on a component of the system, deliveries are always made in the context of the system as a whole.

An environment refers to the infrastructure, configuration, code and services needed for the customer experience, all of these things, together. While you might get away with limited support in a feature branch environment, pipeline stages should be functionally complete environments.

A set of environments becomes the “stages” of a pipeline when two things are true. The environments are ordered, and each is associated with the protocols that govern how changes are made to it. The protocols for merging code to production are, of course, more robust than those for merging code to the initial development stage.

Protocols are the set of policies, permissions and heuristics that facilitate and govern deployment of code and related assets to a given stage. The protocols are a really big part of what gives meaning and context to a pipeline.

The goal of a pipeline is a production release, but deployment to pre-production pipeline stages also constitutes a release. If you don’t respect release protocols in pre-production, you don’t really have a pipeline, you just have a bunch of automation. Think of the protocols as contracts, and of the release event as the execution of a contract.

The protocols should be progressively stronger going down the pipeline. Nobody opens a theater production without a dress rehearsal, and you shouldn’t go live without one either. Make production release dress rehearsals a routine part of your process by simply applying the full production protocols to the last pre-production stage.
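One way to check that protocols really get progressively stronger is to write each stage’s protocol down as a set of required checks. Then verify two properties. First, no stage drops a check required upstream. Second, the last pre-production stage carries exactly the production protocol, which makes it the dress rehearsal. The sketch below assumes protocols can be reduced to named checks. The stage and check names are hypothetical.

```python
from dataclasses import dataclass
from typing import FrozenSet, List


@dataclass(frozen=True)
class Stage:
    name: str
    required_checks: FrozenSet[str]  # the stage's release protocol, as named gates


def protocol_problems(stages: List[Stage]) -> List[str]:
    """Report where an ordered pipeline's protocols fail to strengthen."""
    problems = []
    # Each stage must require at least everything the stage before it required.
    for earlier, later in zip(stages, stages[1:]):
        dropped = earlier.required_checks - later.required_checks
        if dropped:
            problems.append(f"{later.name} drops {sorted(dropped)} required by {earlier.name}")
    # The last pre-production stage should rehearse the full production protocol.
    if len(stages) >= 2 and stages[-2].required_checks != stages[-1].required_checks:
        problems.append(f"{stages[-2].name} is not a dress rehearsal for {stages[-1].name}")
    return problems


full = frozenset({"build", "smoke", "regression", "sign-off", "rollback-tested"})
pipeline = [
    Stage("Commit", frozenset({"build", "smoke"})),
    Stage("Test", frozenset({"build", "smoke", "regression"})),
    Stage("Showcase", full),
    Stage("Production", full),
]
```

An empty list means the written-down protocols are consistent with the rule. It doesn’t show that anyone follows them.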

Automated rollbacks are one of the best investments you’ll ever make in devops, and hardly anyone takes the trouble to build them. Building and testing production release rollbacks is a lot like buying flood insurance: everyone thinks they won’t need it, and when they do, it’s too late. Just keep in mind that building rollbacks into your pipeline without testing them is likely to be worse than having none at all.
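A tested rollback can be sketched as a rehearsal. Deploy a candidate to a pre-production stage, roll it back, and confirm the stage is really back on the previous release and healthy. This is a minimal sketch. The in-memory `Environment` class is a hypothetical stand-in for real infrastructure, and the `healthy` check is a hook you would supply.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class Environment:
    """In-memory stand-in for a pipeline stage (hypothetical)."""
    version: str
    history: List[str] = field(default_factory=list)

    def deploy(self, version: str) -> None:
        self.history.append(self.version)  # remember what we are replacing
        self.version = version

    def rollback(self) -> None:
        if not self.history:
            raise RuntimeError("no previous release to roll back to")
        self.version = self.history.pop()


def rehearse_rollback(env: Environment, candidate: str,
                      healthy: Callable[[Environment], bool]) -> bool:
    """Deploy, roll back, and verify we landed on the prior release, healthy."""
    before = env.version
    env.deploy(candidate)
    env.rollback()
    return env.version == before and healthy(env)


stage = Environment("1.4.0")
ok = rehearse_rollback(stage, "1.5.0", healthy=lambda e: e.version == "1.4.0")
```

In a real pipeline, the same rehearsal would run against the last pre-production stage under production protocols. That way the rollback path gets exercised before you need it.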

Those are proposed operational definitions of six key terms commonly used in delivery practice. It’s not important that you agree with how I’ve defined these terms, but it is essential that your organization has a clear shared understanding of them. The whole point of all of this is not to build a pipeline “correctly”, whatever that means. The point is to “satisfy the customer … through the early and continuous delivery of valuable software”, whatever it takes to realize that.

Martin Fowler put it this way.

One of the challenges of an automated build and test environment is you want your build to be fast so that you can get fast feedback, but comprehensive tests take a long time to run.

A deployment pipeline is a way to deal with this by breaking up your build into stages. Each stage provides increasing confidence, usually at the cost of extra time. Early stages can find most problems yielding faster feedback, while later stages provide slower and more thorough probing.

Using pipelines in this way is a central part of Continuous Delivery.

Think about that phrase “Each stage provides increasing confidence”. Think of the stages of a pipeline as bolted together. The bolts are the protocols and Quality Assurance contracts provided by one stage to the next, all the way to production.

Here’s a template for a common four-stage pipeline. A pipeline can have two stages or ten, whatever your system needs, but a four-stage configuration like this one is common.

Commit, Test, Showcase, Production; if you called them Dev, QA, UAT and Live, it’d be the same thing. Either way, they represent an ordered set of environments leading to production.

The purpose of the first stage is integrating feature branches. Running automated tests against each commit to the integration branch is called “Continuous Integration”. Running automated tests against feature branches doesn’t qualify as CI, because a feature branch, by definition, holds work that hasn’t yet been integrated. The CI test suite has to complete quickly, in no more than a few minutes and preferably less. Smoke tests are all that’s needed here; don’t get caught up in comprehensive testing at this stage.

The second stage is for consolidating a group of commits to run more extensive testing. This stage will likely not be customer facing, since it will often contain feature-incomplete work. This stage is the happy hunting ground of feedback. Take advantage of it by making frequent releases, and concentrate QA efforts here.

The third stage is typically the first customer facing stage. It should be as production-like an environment as possible, in terms of the environment as well as the protocols. Using production release protocols on this stage gives you regular opportunities to practice going live.

Recall that an environment becomes a pipeline “stage” when it is a member of the ordered set of environments leading to production, and when it is associated with the protocols that govern how changes are made to that environment. Those attributes can be thought of as the stage metadata.

The metadata of each stage is:

1.) the environment name, and a statement of the principal objective for that stage

2.) the role of who has authority to merge, and why

3.) the protocols for deploying to the stage

4.) the conditions of success indicating that code is eligible for promotion to the next stage.
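The four pieces of stage metadata can be written down as plain data. The sketch below shows one possible shape. It assumes success conditions can be expressed as predicates over a dictionary of test results. The field names, the example stage, and the result keys are all hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class StageMetadata:
    environment: str            # 1. environment name...
    objective: str              #    ...and the principal objective of the stage
    merge_authority: str        # 2. role with authority to merge...
    merge_rationale: str        #    ...and why that role
    deploy_protocol: List[str]  # 3. the steps for deploying to the stage
    success_conditions: Dict[str, Callable[[dict], bool]]  # 4. promotion criteria

    def failed_conditions(self, results: dict) -> List[str]:
        """Names of success conditions that these results do not meet."""
        return [name for name, met in self.success_conditions.items() if not met(results)]

    def eligible_for_promotion(self, results: dict) -> bool:
        return not self.failed_conditions(results)


test_stage = StageMetadata(
    environment="Test",
    objective="Run extensive testing on a consolidated group of commits",
    merge_authority="QA lead",
    merge_rationale="QA owns the judgment that a batch is good enough to show the customer",
    deploy_protocol=["tag release candidate", "deploy", "run regression suite"],
    success_conditions={
        # A missing result defaults to 1, so an absent report counts as a failure.
        "regression suite green": lambda r: r.get("regression_failures", 1) == 0,
        "no open P1 defects": lambda r: r.get("open_p1", 1) == 0,
    },
)
```

Even if nothing executes it, writing the metadata in this form forces each of the four questions to have an explicit answer.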

Here are three things to look at in a pipeline health check:

1.) Are you really testing integration?

2.) Do you understand your stage objectives?

3.) Do you understand your stage KPIs?

So first, are you really testing integration?

Continuous Integration, or CI, is the first component of Continuous Delivery practice, where the software is built every time code is committed.

A principal value of CI is fast feedback. If your pipeline’s dev instance sits broken and unattended, then whatever your devops is doing for you, it isn’t really CI.

Jez Humble remarked, “There are two words in CI: Continuous and Integration.” “Continuous” means “more often than you think”, and “Integration” means merging to the code branch that will go live. Testing against feature branches has value, but do not confuse it with integration testing.
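Both words can be checked from build records. The sketch below assumes a chronological log of builds, with the branch that will go live hypothetically named `main`. It filters out feature-branch runs, which are not integration. It then totals how long the integration build stayed broken, which tests the “continuous” part. The record format and all names are assumptions.

```python
from dataclasses import dataclass
from typing import List, Optional

INTEGRATION_BRANCH = "main"  # assumption: the branch that will go live


@dataclass(frozen=True)
class Build:
    branch: str
    hour: float   # when the build finished, in hours from some starting point
    passed: bool


def integration_builds(builds: List[Build], branch: str = INTEGRATION_BRANCH) -> List[Build]:
    """Feature-branch runs have value, but only these count as integration."""
    return [b for b in builds if b.branch == branch]


def hours_red(builds: List[Build], now: Optional[float] = None) -> float:
    """Total hours the integration build stayed broken.

    A red build stays red until the next green one; if it is still red at
    the end of the log, it is counted up to `now` when `now` is given.
    """
    total, red_since = 0.0, None
    for b in integration_builds(builds):
        if not b.passed and red_since is None:
            red_since = b.hour
        elif b.passed and red_since is not None:
            total += b.hour - red_since
            red_since = None
    if red_since is not None and now is not None:
        total += now - red_since
    return total


log = [
    Build("main", 0, True),
    Build("feature/login", 1, False),  # ignored: this work is not integrated yet
    Build("main", 1, False),
    Build("main", 2, False),
    Build("main", 5, True),
    Build("main", 6, False),
]
```

For this log, the integration build was red from hour 1 to hour 5. It has also been red since hour 6, which counts only once `now` is supplied.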

Pipeline Objectives and KPIs are expressed as the protocols and testing gates of each stage, respectively. As Yogi Berra said, “If you don’t know where you’re going, you’ll end up someplace else.” The pipeline is an abstraction to help us end up where we’re going.

Another Leaky Abstraction

Joel Spolsky’s law of leaky abstractions applies to the pipeline metaphor: “All non-trivial abstractions, to some degree, are leaky.” Software delivery is certainly non-trivial. Even the most sophisticated petroleum pipeline networks have nothing like the dependency issues of software systems. The pipeline is a good metaphor, but don’t be surprised to find that all of the hard work of building software systems doesn’t fit neatly in the abstraction.

Lean theory is another leaky abstraction. Agile practice is built on a foundation of Lean, which is a manufacturing domain methodology. There are many valuable lessons to be learned from Six Sigma, the Toyota Production System and Lean Practice, but don’t lose sight of the fact that software systems represent a distinct problem domain. Parallels from the manufacturing domain are good talking points. Don’t fall into the trap of thinking that software can be built the way an assembly line is run. It doesn’t work that way.

An abstraction from Lean theory that does map very well to the software domain is the concept of Cycle Time as a key health metric of delivery practice. Cycle Time starts when a story goes into work and ends with delivery to production. It is the foundation for understanding flow efficiency.

Flow efficiency is the ratio of actual time on task to cycle time. Time on task is the time team members actually spend dedicated to a particular story. A story with 2 days of time on task may have a cycle time of weeks or longer before it finally lands in production. Improving flow efficiency often offers the greatest opportunity to improve productivity in software engineering: your proverbial low-hanging fruit. What happens while work in progress is not being worked on turns out to be a big deal.
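As a worked example, flow efficiency is a simple ratio. Take a story with 2 days of time on task and assume, for illustration only, a cycle time of 14 days:

```latex
\text{flow efficiency}
  = \frac{\text{time on task}}{\text{cycle time}}
  = \frac{2\ \text{days}}{14\ \text{days}}
  \approx 14\%
```

Under that assumption, the story is actually being worked on for about one day in seven.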

In contrast, manufacturing operations generally already have high flow efficiency, so Lean practitioners emphasize other optimizations. For example, lean practice tends to focus first on eliminating wasteful steps, because that’s where the low-hanging fruit is in assembly operations in the physical domain. In the software domain, where flow efficiency is very low, this kind of optimization has a negligible impact on productivity. It targets actual time on task, which we’ve already noted is small, so reductions in time on task will often not even register in cycle time. This doesn’t mean that those optimizations are valueless. It just means they’re less important than other inefficiencies you should focus on first. It’s a question of priorities, and cycle time should be the judge. Instead of focusing on time on task, focus first on reducing the time between tasks. Your reward will be less work in progress, greater throughput, and of course, increased flow efficiency.
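The priority argument is easy to check with arithmetic. The sketch below treats cycle time as time on task plus waiting time. That is a simplifying assumption that ignores overlap. It compares cutting each component by the same 25%, using illustrative figures of 2 days on task and 12 days waiting.

```python
def cycle_time_reduction(on_task: float, waiting: float,
                         cut_on_task: float = 0.0, cut_waiting: float = 0.0) -> float:
    """Fraction of cycle time saved by cutting each component by a fraction.

    Assumes cycle time = time on task + waiting time (no overlap).
    """
    before = on_task + waiting
    after = on_task * (1 - cut_on_task) + waiting * (1 - cut_waiting)
    return (before - after) / before


faster_work = cycle_time_reduction(2, 12, cut_on_task=0.25)    # saves 0.5 of 14 days
shorter_waits = cycle_time_reduction(2, 12, cut_waiting=0.25)  # saves 3 of 14 days
print(f"{faster_work:.1%} vs {shorter_waits:.1%}")  # 3.6% vs 21.4%
```

The same 25% effort yields roughly six times the cycle-time improvement when it is aimed at waiting rather than at the work itself.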

The main point here is that building software systems is not like running a manufacturing operation. If you take lean practice too literally in software development, you’ll end up with some very leaky abstractions indeed, which is hardly a way to build a competitive position.

Continuous Delivery practice is built on a solid lean foundation but it requires a disciplined approach to a number of software system specific problems, including branch management, merge policies, configuration management, dependency management, feature toggling, test gate specification, and roll back planning.

The canonical reference for the practice is still Jez Humble and David Farley’s 2010 book “Continuous Delivery”. The more recent book “Accelerate” is the product of the most comprehensive survey of software delivery practice ever attempted. It is a useful source for making the case to senior management on the benefits they can expect from investing in devops and delivery practice.

Support from management is important, but it’s not about the spend. Pipeline protocols written down on the back of a napkin can be worth more than many big-budget devops initiatives. Start by writing down your stage release protocols and success criteria. Making your protocols explicit in this way is more important than automation.

Software delivery is not primarily a problem of technology. Technology is just the tooling, and the tooling won’t help if you don’t understand the problem clearly. Sakichi Toyoda’s legacy teaches us that success rests on fostering a culture of continual improvement.

Taiichi Ohno’s legacy teaches us to hang our signaling mechanisms on a framework, and let quality regulate time. Continuous Integration places feedback triggers as close as possible to the source of problems, to limit harm and maximize the opportunity for improvement.

Deming’s legacy teaches us that the source of nearly all problems is to be found in the system, not in the people whose hands are on the work when things go wrong. The first order of business is to bring processes into statistical control, a prerequisite for understanding which aspect of the system to try to improve.

Walter Shewhart taught that it is pointless to try to establish control of a process without first agreeing on operational definitions of the key attributes of the process, especially those that define the boundaries between teams and transactions.

Jez Humble and David Farley have done a lot of the heavy lifting of putting all this in the context of software engineering practice.

Consider the words “…They do not have the expertise gained from the failures it took to produce the original…”. Which side of that question do you want to be on? Do you want to be with those who put learning from feedback at the center of their practice? Or with the other guys, content with copying someone else’s best practices? The delivery pipeline is not just someplace your code goes when it’s done; it’s the workbench on which teams build systems.

Frederick Winslow Taylor’s discredited approach to efficiency still casts a long shadow over software practice. The outlook of Sakichi Toyoda is better suited to the challenges of building and delivering complex software systems than the tired ideas of Taylorism.

We’re not in the business of writing code any more than a factory is in the business of turning bolts. We write code, but we’re in the business of building complete, complex software systems. How we manage delivery practice is perhaps the single most important aspect of our value proposition to the customer.

Organizations that leverage delivery practice to foster continual learning and innovation gain a significant competitive advantage. In challenging times like these, it could easily be the margin of success.

David Hofmann — "Angel Dance"

Let’s agree to define productivity in terms of throughput. We could debate adding other measures of the business value of delivered work. But as Eliyahu Goldratt pointed out in his critique of the Balanced Scorecard, there is a virtue in simplicity. Throughput doesn’t answer all our questions about business value. It is, however, a sufficient metric for evaluating the relationship between practices and productivity.
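Once throughput is the agreed metric, it can be computed directly from delivery records. This is a minimal sketch, assuming you can list the dates on which work items reached production. The function name is hypothetical, and so is the choice of ISO weeks as the reporting period.

```python
from collections import Counter
from datetime import date
from typing import Dict, List, Tuple


def weekly_throughput(delivered_on: List[date]) -> Dict[Tuple[int, int], int]:
    """Count items delivered to production per (ISO year, ISO week)."""
    counts = Counter(tuple(d.isocalendar())[:2] for d in delivered_on)
    return dict(sorted(counts.items()))


deliveries = [date(2024, 1, 1), date(2024, 1, 3), date(2024, 1, 9)]
weekly = weekly_throughput(deliveries)  # {(2024, 1): 2, (2024, 2): 1}
```

Counting only what reached production keeps the metric honest. Work that is merely “done” in an earlier stage doesn’t count.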