Agile Calibration

Why did inspections fail to detect excessive wear?

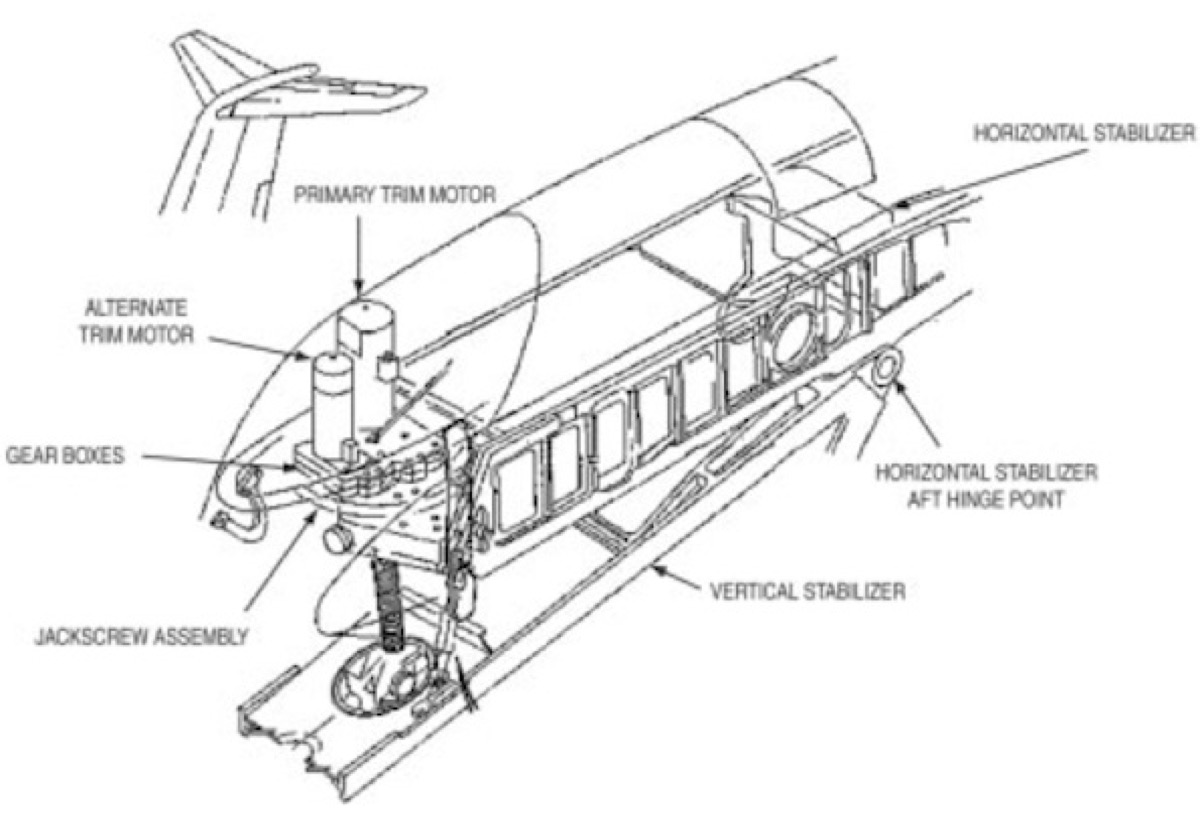

On January 31, 2000, an Alaska Airlines MD-80 disappeared into the Pacific Ocean off the coast of Santa Barbara, California, with 88 souls aboard. The crash investigation determined the cause to be…

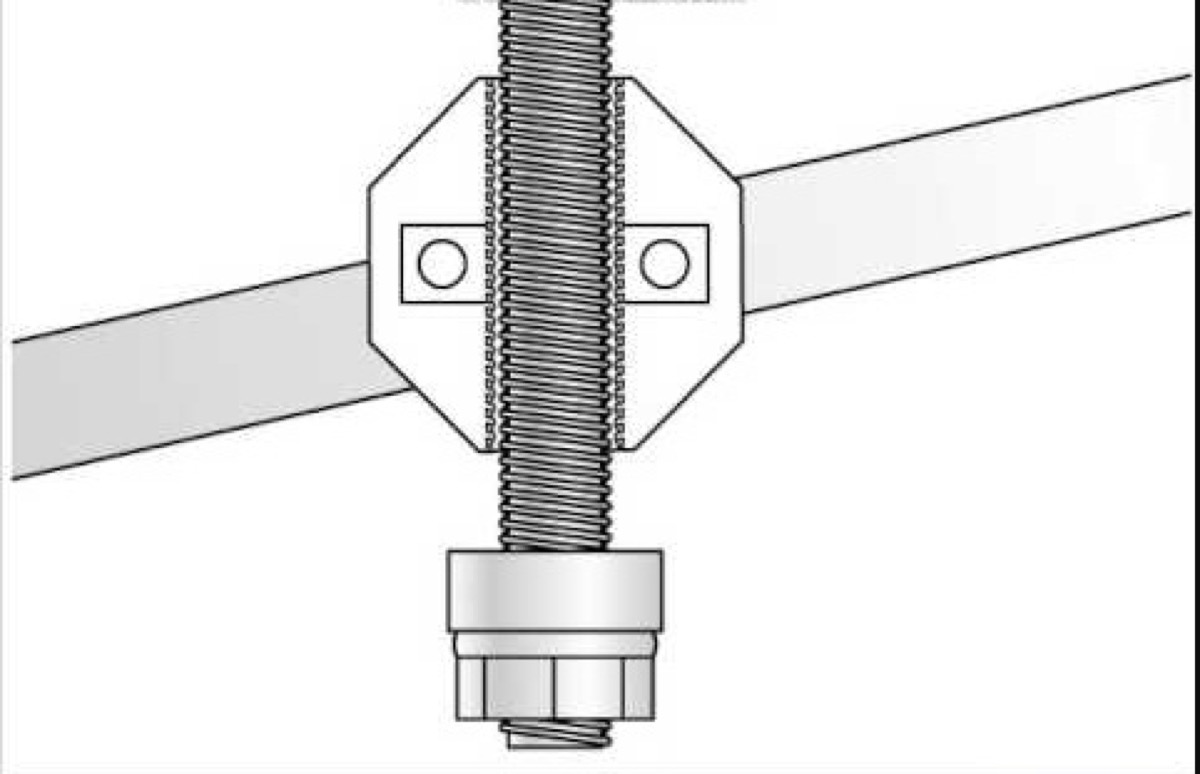

“…a loss of airplane pitch control resulting from the in-flight failure of the horizontal stabilizer trim system jackscrew assembly’s acme nut threads. The thread failure was caused by excessive wear resulting from … insufficient lubrication of the jackscrew assembly.”

Why did inspections fail to detect the excessive wear?

Root Cause Analysis

Excessive wear does not happen all of a sudden. It takes time, and time implies opportunities to detect and remediate. The crash investigators had to dig deeper.

The maintenance engineering specification for the MD-80 stabilizer properly identified the nature of the risk and prescribed an adequate inspection process for periodic evaluation of thread wear. Maintenance records showed compliance with the procedure at the specified intervals. Mechanics who were trained and certified to work on MD-80s inspected the stabilizer and approved the plane to return to service, despite the fact that the jackscrew threads were dangerously worn. So what went wrong?

The weak link in this tragic chain of events was that the tool used to measure thread wear had not been calibrated before use. The thread measurement reading did not reflect the actual thread wear.

What is Calibration?

Calibration is a process where we measure against a known quantity so that afterwards, when we measure an unknown, we can have confidence in the results.

— Douglas Hubbard

Before a scale is certified for commercial transactions, it is calibrated to a known set of weights, so when our turnips are in the balance, we can be confident that we’re getting what we’re paying for. When we allocate budget for an Agile implementation, how do we validate that the process we’re buying is producing value for the business?
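The scale example can be made concrete with a short sketch. It assumes the scale's error is linear (a fixed offset plus a proportional error), and the reference weights and readings are invented for illustration; real calibration procedures are more involved.

```python
def fit_calibration(known_weights, readings):
    """Fit a least-squares line that maps raw readings to true weights."""
    n = len(known_weights)
    mean_r = sum(readings) / n
    mean_w = sum(known_weights) / n
    sxx = sum((r - mean_r) ** 2 for r in readings)
    sxy = sum((r - mean_r) * (w - mean_w)
              for r, w in zip(readings, known_weights))
    slope = sxy / sxx
    offset = mean_w - slope * mean_r
    return slope, offset


def corrected(reading, slope, offset):
    """Convert a raw reading into a calibrated weight."""
    return slope * reading + offset


# Hypothetical data: a scale that reads 2% high plus 0.05 kg.
known = [1.0, 2.0, 5.0, 10.0]    # certified reference weights, kg
raw = [1.07, 2.09, 5.15, 10.25]  # what the scale displayed for each

slope, offset = fit_calibration(known, raw)

# Only now do we weigh the unknown: the turnips read 3.11 kg on the scale.
turnips = corrected(3.11, slope, offset)
print(f"calibrated weight: {turnips:.2f} kg")
```

The order of operations is the point: the known quantities come first, and the unknown is measured only after the instrument's error has been characterized.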

In God we trust; all others bring data.

— W. Edwards Deming

Calibration is about establishing the credibility of the data that we use to support our claims of value delivered. To begin to unpack what it means to calibrate an Agile initiative, we need to put some thought into what the process is and how we’re measuring success.

What is Agile Calibration?

“Agile Calibration” is a bit of a ruse, because “Agile” isn’t an objective, not something to be measured. Outcomes are all that anyone is really interested in; at least anyone who really cares about the business. We don’t measure practices, we measure outcomes. Regardless of what you think the Agile best practices are, Agile is really mostly a promise to make things better, to improve outcomes. So let’s measure the outcomes and calibrate the measurements.

Calibration is just how we go about checking up on our data, to make sure that the Scrum Master’s finger isn’t tipping the scale. It’s not magic. An honest pursuit of objective improvement and a healthy skepticism for reported metrics is a good starting point. A respect for math is an invaluable ally in trying to get to the bottom of things. But pulling metrics from an unstable system is worse than no metrics at all.

What are we Calibrating?

If you can’t describe what you’re doing as a process, then you don’t know what you’re doing.

— W. Edwards Deming

The first order of business is demonstrating some baseline of stability in the system. In some ways, qualitative measurements can be better suited than numeric and derivative measures. I’ll develop what that means in subsequent posts; for our purposes here, let’s just say that qualitative attributes, such as the observation of behaviors, can be used to help establish the stability of the system.

Fools rush in, where angels fear to tread

— Alexander Pope

The impulse to collect numeric metrics is nearly irresistible, and premature attempts to gather data of this type can be damaging when decisions are based on the noisy results you can expect from an unstable system. First establish a shared understanding of what a stable system looks like for you, in your context. Do a gap analysis and iterate until you can demonstrate stability. Only then will system metrics be potentially meaningful.

Are you getting what you're paying for?

To answer that question, we need to start with relevant metrics from a stable system; then we will be in a position to discuss what productivity means for you and how to evaluate whether you’re improving on it. If your Agile initiative is not objectively improving productivity, then you’re paying for something that you’re not getting, and losing time in the bargain.

Launde Morel — "Cockpit at Night"

Let's agree to define productivity in terms of throughput. We can debate the meaning of productivity in terms of additional measurements of the business value of delivered work, but as Eliyahu Goldratt pointed out in his critique of the Balanced Scorecard, there is a virtue in simplicity. Throughput doesn’t answer all our questions about business value, but it is a sufficient metric for the context of evaluating the relationship of practices with productivity.
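As a rough illustration of throughput from a stable system, here is a minimal sketch that counts work items finished per iteration and checks them against the natural process limits of an individuals (XmR) chart. The XmR chart is one common technique, not something this post prescribes, and the numbers and function names are hypothetical.

```python
def xmr_limits(throughput):
    """Return (mean, lower, upper) natural process limits for an XmR chart.

    Limits are mean +/- 2.66 * average moving range, the standard
    constant for individuals charts.
    """
    mean = sum(throughput) / len(throughput)
    moving_ranges = [abs(b - a) for a, b in zip(throughput, throughput[1:])]
    avg_mr = sum(moving_ranges) / len(moving_ranges)
    lower = max(0.0, mean - 2.66 * avg_mr)  # a count cannot be negative
    upper = mean + 2.66 * avg_mr
    return mean, lower, upper


def out_of_limits(throughput):
    """Indexes of iterations whose throughput falls outside the limits."""
    _, lower, upper = xmr_limits(throughput)
    return [i for i, x in enumerate(throughput) if x < lower or x > upper]


# Hypothetical data: work items finished in each of ten iterations.
items_per_iteration = [8, 9, 7, 10, 8, 9, 8, 21, 9, 8]

signals = out_of_limits(items_per_iteration)
print("iterations to investigate:", signals)  # → [7]
```

A point outside the limits is a prompt to ask what happened in that iteration, not a verdict. Until such signals are understood, comparing average throughput before and after a change in practices says very little.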