The Entropy of Information

a measure of uncertainty

Information entropy is the average amount of information conveyed by an event when considering all possible outcomes.
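Stated formally, this is the standard Shannon definition. Each outcome x with probability p(x) carries -log2 p(x) bits of information, and entropy is the probability-weighted average of that quantity, measured in bits:

$$H(X) = -\sum_{x} p(x)\,\log_2 p(x)$$

When all N outcomes are equally likely, every p(x) equals 1/N, and the sum reduces to H(X) = log2 N.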

When you toss two fair coins, the information entropy is the base-2 logarithm of the number of possible outcomes, because every outcome is equally likely: two coins, four possible outcomes, 2 bits of entropy.
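Here is a minimal Python sketch of that arithmetic, assuming the two coins are independent. The helper names `shannon_entropy` and `two_coin_distribution` are hypothetical and exist only for illustration. The sketch also shows that log2 of the outcome count is a ceiling: if you bias the coins, the outcomes are no longer equally likely and the entropy drops.

```python
import math
from itertools import product


def shannon_entropy(probs):
    """Shannon entropy, in bits, of a discrete distribution given as probabilities."""
    # Outcomes with p == 0 contribute nothing (p * log p -> 0 as p -> 0).
    return -sum(p * math.log2(p) for p in probs if p > 0)


def two_coin_distribution(p_heads):
    """Joint outcome probabilities for two independent coins with P(heads) = p_heads."""
    faces = {"H": p_heads, "T": 1 - p_heads}
    return {a + b: faces[a] * faces[b] for a, b in product("HT", repeat=2)}


fair = two_coin_distribution(0.5)
print(fair)                            # {'HH': 0.25, 'HT': 0.25, 'TH': 0.25, 'TT': 0.25}
print(shannon_entropy(fair.values()))  # 2.0
print(math.log2(len(fair)))            # 2.0, same as the entropy when outcomes are equally likely

# Heavily biased coins: still four outcomes, but far less uncertainty.
biased = two_coin_distribution(0.9)
print(round(shannon_entropy(biased.values()), 3))  # 0.938
```

Passing probabilities rather than raw outcomes keeps `shannon_entropy` reusable for any discrete distribution, not just coins.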

The basic idea of information theory is that the more one knows about a topic, the less new information one is apt to get about it: the fidelity of the signal. The converse is what I refer to as leveraging uncertainty in estimation: the less one knows about the possible outcomes, the easier it is to obtain new information that reduces uncertainty.
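One standard way to formalize this intuition (our framing, not a claim from the original argument) uses mutual information. The information an observation Y provides about a quantity X is the reduction in entropy it produces. Because the remaining uncertainty H(X | Y) can never be negative for a discrete X, that gain is capped by the prior entropy:

$$I(X;Y) = H(X) - H(X \mid Y) \le H(X)$$

A well-understood topic (low H(X)) leaves little for any observation to add. A vague prior (high H(X)) leaves room for a large reduction.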

Consider the question: among all IT department personnel, what is the average amount of time that people spend in meetings during a regular work week? What is the entropy of that question? How many people would we have to survey to have a statistically significant result? Two-thirds of them? Half of them? How about if we just asked five people at random?

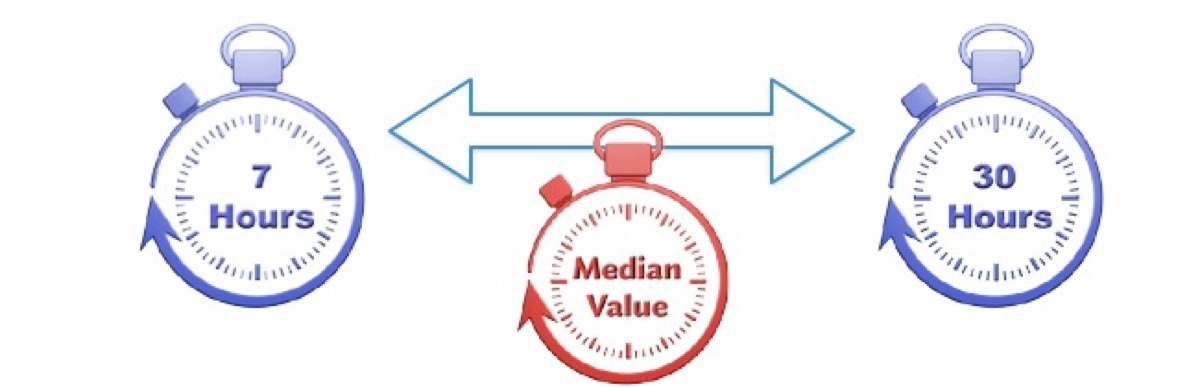

How much would that new information reduce the entropy of our question? What is the probability that the actual median of weekly meeting time for everyone falls within the range bounded by the highest and lowest values from our random sample of five?

The answer is that there is a greater than 90% probability (15/16, or 93.75%) that the actual median falls within the range bounded by the random sample of five. Ask me about the math later. The point is, when your prior state of uncertainty is high, as it was in this example, a surprisingly small effort will often yield a useful reduction in uncertainty.
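For readers who want the math now, here is a short derivation, assuming the five people are drawn independently at random and meeting times are continuous, so no sampled value ties the median exactly. By definition, each draw lands above the population median with probability 1/2. The sample range misses the median only if all five land above it or all five land below it:

$$P(\text{miss}) = 2 \times \left(\tfrac{1}{2}\right)^5 = \tfrac{1}{16}, \qquad P(\text{median in range}) = 1 - \tfrac{1}{16} = \tfrac{15}{16} = 93.75\%$$

Nothing in this argument depends on the shape of the distribution, which is why such a small sample is enough.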

Our measurement doesn’t have to vanquish uncertainty to be valuable; it just needs to reduce it. We don’t learn the actual average meeting time with a random sample of five, just the range that will likely contain the median value: more than we knew before and a baseline for further inquiry.
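A quick Monte Carlo check of that claim, written as a sketch. The population of weekly meeting hours is hypothetical: 2,000 people drawn from a right-skewed lognormal distribution. `rule_of_five_hit_rate` is an illustrative helper, not a standard function. The simulation repeatedly surveys five people at random and counts how often the sample's lowest-to-highest range contains the true population median.

```python
import random
import statistics


def rule_of_five_hit_rate(population, trials=50_000, sample_size=5, seed=1):
    """Fraction of random samples whose min..max range contains the population median."""
    rng = random.Random(seed)
    true_median = statistics.median(population)
    hits = 0
    for _ in range(trials):
        # Survey sample_size distinct people (sampling without replacement).
        sample = rng.sample(population, sample_size)
        if min(sample) <= true_median <= max(sample):
            hits += 1
    return hits / trials


# Hypothetical population: weekly meeting hours for 2,000 staff, skewed right.
pop_rng = random.Random(42)
population = [pop_rng.lognormvariate(2.0, 0.6) for _ in range(2_000)]

exact = 1 - 2 * 0.5 ** 5  # analytic value for independent draws
print(exact)              # 0.9375
print(rule_of_five_hit_rate(population))  # close to 0.9375
```

The simulated hit rate depends only on whether each draw falls above or below the median, so swapping in a differently shaped hypothetical population should give a similar result. Because a finite population is sampled without replacement, the rate may land slightly above 0.9375.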

Let's agree to define productivity in terms of throughput. We can debate the meaning of productivity in terms of additional measurements of the business value of delivered work, but as Eliyahu Goldratt pointed out in his critique of the Balanced Scorecard, there is a virtue in simplicity. Throughput doesn't answer all our questions about business value, but it is a sufficient metric for evaluating the relationship between practices and productivity.